You may be thinking that a Tensor is probably a mysterious and complicated thing, used exclusively in Machine Learning algorithms. But in reality, a Tensor is something quite simple and common.

So, what is a Tensor?

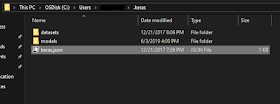

A representation of a Tensor. Image courtesy: Wikipedia - https://commons.wikimedia.org/wiki/File:Components_stress_tensor.svg

A Tensor is simply a data structure: a more general form of the data collections we see in programming languages. Unlike most other data structures, however, the position of a value in a Tensor typically has a meaning (we'll get to that in a bit).

A Tensor may have zero or more dimensions; the number of dimensions is called the 'Order' of the Tensor.

A scalar, or a single number, is a Tensor of order zero, which can also be thought of as an array of dimension zero.

A Tensor of order one would be a Vector, or a one-dimensional array.

A Tensor of order two would be a matrix, or a two-dimensional array.

And so on... Tensors of any order can be made.
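To make the idea of order concrete, here is a minimal sketch in plain Python that uses nested lists as stand-ins for Tensors. The helper `order` is a hypothetical name introduced for this illustration, and it assumes the nesting is regular and non-empty.

```python
def order(tensor):
    """Return the nesting depth of a regular nested list (0 for a bare number)."""
    depth = 0
    while isinstance(tensor, list):
        depth += 1
        tensor = tensor[0]  # assumes non-empty, regularly shaped nesting
    return depth


scalar = 7.0                                  # order 0: a single number
vector = [1.0, 2.0, 3.0]                      # order 1: one-dimensional array
matrix = [[1, 2, 3], [4, 5, 6]]               # order 2: two-dimensional array
cube = [[[0, 1], [2, 3]], [[4, 5], [6, 7]]]   # order 3: three-dimensional array

orders = [order(t) for t in (scalar, vector, matrix, cube)]
print(orders)  # [0, 1, 2, 3]
```

Array libraries usually report this number directly; in NumPy, for example, it is the `ndim` attribute of an array.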

As I mentioned above, in typical use cases the position of a value in a tensor, commonly referred to as its coordinates, has a meaning, and the values transform according to rules we define when the coordinates change.

For example, we can define a tensor of order two that contains the pixel values of a grayscale image. In this case, the coordinates of the values in the tensor represent the X and Y coordinates of the pixels of the image, and the values themselves are the grayscale values at those coordinates. And, as you would expect, if you transform the coordinates, the value found at a given position changes as well.
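The grayscale example above can be sketched in plain Python as follows. The tiny 2 x 3 image, the helpers `pixel` and `swap_axes`, and the choice of swapping X and Y as the coordinate transformation are all assumptions made for illustration.

```python
# A tiny grayscale image as an order-two Tensor (nested list).
# Rows correspond to Y, columns to X, values are intensities from 0 to 255.
image = [
    [0, 128, 255],
    [64, 192, 32],
]


def pixel(img, x, y):
    """Look up the grayscale value at coordinates (x, y)."""
    return img[y][x]


def swap_axes(img):
    """Transform the coordinates by swapping X and Y (a transpose)."""
    return [list(column) for column in zip(*img)]


swapped = swap_axes(image)

print(pixel(image, 2, 0))    # 255: the value at x=2, y=0 in the original
print(pixel(swapped, 0, 2))  # 255: the same value, now found at x=0, y=2
print(pixel(swapped, 1, 0))  # 64: x=1, y=0 now holds a different value than before
```

The values themselves are unchanged; what changes is which coordinates each value lives at, which is why the value read at a fixed position differs after the transformation.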

Related links:

http://www.physlink.com/education/askexperts/ae168.cfm

https://en.wikipedia.org/wiki/Tensor

http://mathworld.wolfram.com/Tensor.html
