AlexNet - 2012

[Figure: The AlexNet Architecture (Image from the research paper)]

- Proved that Convolutional Neural Networks actually work. AlexNet, together with its research paper "ImageNet Classification with Deep Convolutional Neural Networks" by Alex Krizhevsky, Ilya Sutskever, and Geoffrey E. Hinton, is commonly credited with bringing Deep Learning into the mainstream.

- Won the 2012 ILSVRC (ImageNet Large-Scale Visual Recognition Challenge) with a top-5 error rate of 15.3%. (For reference, the second-best entry had a 26.2% error rate.)

- 8 layers: 5 convolutional, 3 fully connected.

- Used ReLU as the non-linearity, rather than the tanh function that was conventional until then.

- Used Dropout layers and Data Augmentation to combat overfitting, which helped popularize both techniques.
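To make the ReLU and Dropout points concrete, here is a minimal sketch in plain Python. It compares the gradients of ReLU and tanh (tanh saturates for large inputs, ReLU does not) and applies dropout to a list of activations. The function names are made up for illustration, and the dropout shown is the "inverted" variant common in modern frameworks, which is an assumption here and not necessarily the exact scheme used in the AlexNet paper.

```python
import math
import random


def relu(x):
    """Rectified linear unit: passes positive inputs, zeroes the rest."""
    return max(0.0, x)


def relu_grad(x):
    """Gradient of ReLU: 1 for positive inputs, 0 otherwise."""
    return 1.0 if x > 0 else 0.0


def tanh_grad(x):
    """Gradient of tanh: 1 - tanh(x)^2, which shrinks toward 0 as |x| grows."""
    return 1.0 - math.tanh(x) ** 2


def dropout(activations, p_drop, rng):
    """Inverted dropout (assumed variant): zero each unit with probability
    p_drop and rescale the survivors so the expected value is unchanged."""
    keep = 1.0 - p_drop
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


# Compare gradients as the input grows: tanh saturates, ReLU stays at 1.
for x in (0.5, 2.0, 5.0):
    print(x, relu_grad(x), round(tanh_grad(x), 4))

# Drop roughly half of eight unit activations (seeded for repeatability).
print(dropout([1.0] * 8, p_drop=0.5, rng=random.Random(0)))
```

The shrinking tanh gradient is one commonly given reason why ReLU networks train faster: the error signal is not squashed every time it passes through an activation.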

ZF Net - 2013

[Figure: The ZF Net Architecture (Image from the research paper)]

- Winner of ILSVRC 2013 with an error rate of 11.2%.

- Similar to the AlexNet architecture, with some tweaks and fine-tuning to improve performance.

- Introduced the Deconvolutional Network (a.k.a. DeConvNet), a visualization technique to view the inner workings of a CNN.

VGG Net - 2014

[Figure: The VGG Net Architecture (Image from the Keras Blog)]

- Won the localization task of the "Classification+localization" category of ILSVRC 2014 and placed second in classification (so not the overall winner), with a top-5 classification error rate of 7.3%.

- The VGG architecture worked well for both image classification and localization.

- Up to 19 layers deep, using only 3x3 filters (compared to the 11x11 first-layer filters of AlexNet and the 7x7 first-layer filters of ZF Net).

- Proved that simple, deep structures work for hierarchical feature extraction.
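One way to see why small 3x3 filters are attractive: three stacked 3x3 convolutions cover the same 7x7 receptive field as a single 7x7 convolution, but with fewer weights. The sketch below does that arithmetic. The helper names and the channel width of 256 are illustrative assumptions, and biases are ignored.

```python
def conv_weights(kernel, channels, layers=1):
    """Weights in `layers` stacked kernel x kernel convolutions, each with
    `channels` input and output channels (biases ignored)."""
    return layers * kernel * kernel * channels * channels


def receptive_field(kernel, layers):
    """Receptive field of `layers` stacked stride-1 convolutions."""
    return 1 + layers * (kernel - 1)


channels = 256  # illustrative width, not tied to a specific VGG layer

stacked = conv_weights(3, channels, layers=3)  # three 3x3 layers
single = conv_weights(7, channels)             # one 7x7 layer

# Both options see a 7x7 patch of the input.
print(receptive_field(3, 3), receptive_field(7, 1))

# The stacked version needs 27/49 of the weights of the single layer.
print(stacked, single, stacked / single)
```

The stacked version also applies three non-linearities instead of one, which makes the resulting function more expressive for the same receptive field.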

GoogLeNet - 2014/2015

[Figure: The GoogLeNet Architecture (Image from the research paper)]

- The winner of ILSVRC 2014 with an error rate of 6.7%.

- Introduced the Inception Module, which showed that the layers of a CNN don't always have to be stacked up sequentially.

[Figure: The Inception Module (Image from the research paper)]

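- 22 layers deep (about 100 layers when the individual building blocks are counted).

- No large fully connected layers: global average pooling is used before the classifier, leaving only a single linear layer for the final classification.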

- Proved that optimized non-sequential structures may work better than sequential ones.
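The Inception Module runs several convolution branches in parallel on the same input and concatenates their outputs along the channel axis. The sketch below only does the bookkeeping: it adds up the output channels and shows how a 1x1 "reduce" convolution cuts the weight count of the 5x5 branch. The helper names are hypothetical, and the channel counts are the ones I recall for the "inception (3a)" module in the paper's architecture table, so treat them as illustrative.

```python
def conv_params(in_ch, out_ch, kernel):
    """Weights of one kernel x kernel convolution (biases ignored)."""
    return in_ch * out_ch * kernel * kernel


def branch_params(in_ch, reduce_ch, out_ch, kernel):
    """A kernel x kernel branch preceded by a 1x1 'reduce' convolution."""
    return conv_params(in_ch, reduce_ch, 1) + conv_params(reduce_ch, out_ch, kernel)


def inception_out_channels(n1x1, n3x3, n5x5, pool_proj):
    """Branch outputs are concatenated along the channel axis."""
    return n1x1 + n3x3 + n5x5 + pool_proj


in_ch = 192  # assumed input depth for this module

# Output depth of the module: the sum of the four parallel branches.
print(inception_out_channels(n1x1=64, n3x3=128, n5x5=32, pool_proj=32))

# 5x5 branch: direct convolution vs. reducing to 16 channels first.
print(conv_params(in_ch, 32, 5), branch_params(in_ch, 16, 32, 5))
```

The 1x1 reductions are what keep the parallel structure affordable: without them, the wide 5x5 and 3x3 branches would dominate the parameter count.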

Microsoft ResNet - 2015

[Figure: The ResNet Architecture (Image from the research paper)]

- Won ILSVRC 2015.

- With a top-5 error rate of 3.6%, ResNet is more accurate than a human on this task (a typical human is said to have an error rate of about 5-10%).

- Ultra-deep (quoting the authors) architecture with 152 layers.

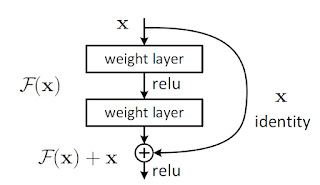

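- Introduced the Residual Block, whose shortcut connections make very deep networks easier to optimize. (The problem it addresses is the degradation of training accuracy as depth grows, which the authors note is not caused by overfitting.)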

[Figure: The Residual Block (Image from the research paper)]
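The idea behind the Residual Block is that the block outputs F(x) + x: a learned residual F(x) added to the unchanged input x through a shortcut connection. Here is a minimal sketch on plain Python lists, where `residual_fn` stands in for the stacked convolution layers. The function names are hypothetical, and the sketch assumes the input and output shapes match.

```python
def residual_block(x, residual_fn):
    """Return F(x) + x: the learned residual plus an identity shortcut."""
    fx = residual_fn(x)
    if len(fx) != len(x):
        # Assumption: shapes match; mismatched shapes are not handled here.
        raise ValueError("shortcut needs matching shapes")
    return [f + xi for f, xi in zip(fx, x)]


def zero_residual(x):
    """A block that has learned nothing yet: F(x) = 0."""
    return [0.0 for _ in x]


def small_residual(x):
    """A block that has learned a small correction: F(x) = 0.1 * x."""
    return [0.1 * xi for xi in x]


x = [1.0, -2.0, 3.0]

# With F(x) = 0 the block is an identity mapping, so stacking many such
# blocks does not distort the signal.
deep = x
for _ in range(152):
    deep = residual_block(deep, zero_residual)
print(deep)

# A non-zero residual nudges the input instead of replacing it.
print(residual_block(x, small_residual))
```

Because a block can fall back to the identity by driving F(x) toward zero, adding more blocks should not make the network harder to fit, which is the intuition the authors give for training networks this deep.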

With Deep Learning models starting to surpass human abilities on tasks like this, we can expect more interesting Deep Learning models and achievements in the coming years.

Is Deep Learning just CNNs?

Now, looking back at our list above, you might be wondering whether "Deep Learning" is just Convolutional Neural Networks.

No.

Actually, all of the following models are considered Deep Learning.

- Convolutional Neural Networks

- Deep Boltzmann Machines

- Deep Belief Networks

- Stacked Autoencoders

Related posts:

What is Deep Learning?

Related links:

https://adeshpande3.github.io/adeshpande3.github.io/The-9-Deep-Learning-Papers-You-Need-To-Know-About.html

http://karpathy.github.io/2014/09/02/what-i-learned-from-competing-against-a-convnet-on-imagenet/
